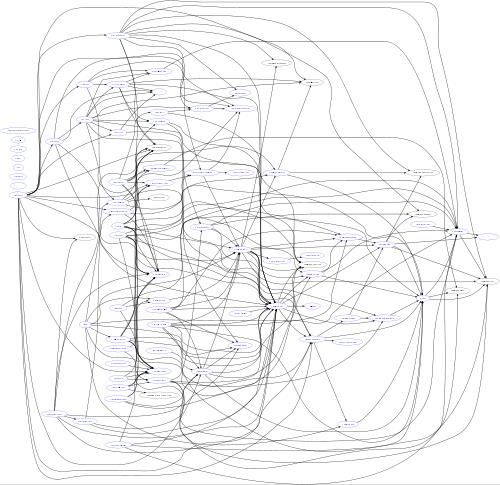

Have you heard of Design by Contract (DbC for short)? If not, here is a good introduction from Eiffel. (In short, Design by Contract is one of the major mechanisms to ensure the reliability of object-oriented software. It focuses on the communication between components and requires their interactions to be defined precisely. These specifications are called contracts, and they consist of preconditions, postconditions and invariants. Unlike plain assertions that check these conditions after the fact, DbC treats the contracts as an important part of the design process, to be written first. It is a systematic approach to building bug-free object-oriented systems and helps with testing and debugging.)
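To make the three kinds of conditions concrete, here is a minimal sketch in plain Java that checks them by hand, without any contract library. The `Account` class, its `check` helper and the `ContractViolation` exception are hypothetical names made up for this illustration. A DbC library would let us declare the same conditions instead of hand-coding them.

```java
// Hypothetical example: contracts checked by hand in plain Java.
class Account {

    /** Hypothetical exception type, thrown whenever a contract is broken. */
    static class ContractViolation extends RuntimeException {
        ContractViolation(String message) {
            super(message);
        }
    }

    private long balance; // class invariant: balance >= 0

    private static void check(boolean condition, String message) {
        if (!condition) {
            throw new ContractViolation(message);
        }
    }

    private void checkInvariant() {
        check(balance >= 0, "invariant: balance >= 0");
    }

    public long balance() {
        return balance;
    }

    public void deposit(long amount) {
        check(amount > 0, "precondition: amount > 0");
        long oldBalance = balance; // remember the old state for the postcondition
        balance += amount;
        check(balance == oldBalance + amount, "postcondition: balance grew by amount");
        checkInvariant();
    }

    public void withdraw(long amount) {
        check(amount > 0 && amount <= balance, "precondition: 0 < amount <= balance");
        long oldBalance = balance;
        balance -= amount;
        check(balance == oldBalance - amount, "postcondition: balance shrank by amount");
        checkInvariant();
    }
}
```

The caller is responsible for the preconditions, the method for the postconditions, and the class for keeping its invariant true after every public call. Writing this by hand is repetitive, which is exactly the part a contract library takes over.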

Cofoja (Contracts for Java)

Cofoja is a Design by Contract library for Java. It uses annotation processing and bytecode instrumentation to provide run-time checking. It supports a contract model similar to that of Eiffel, with added support for a few Java-specific things, such as exceptions. In Cofoja, contracts are written as Java code within quoted strings, embedded in annotations. Here is some sample code (derived from the lost icontract library): a basic stack with methods to push, pop and see the top element.

    import java.util.LinkedList;

    import com.google.java.contract.Ensures;
    import com.google.java.contract.Invariant;
    import com.google.java.contract.Requires;

    @Invariant({ "elements != null",
                 "isEmpty() || top() != null" }) // (1)
    public class CofojaStack<T> {

        private final LinkedList<T> elements = new LinkedList<T>();

        @Requires("o != null") // (2)
        @Ensures({ "!isEmpty()", "top() == o" }) // (3)
        public void push(T o) {
            elements.add(o);
        }

        @Requires("!isEmpty()")
        @Ensures({ "result == old(top())", "result != null" })
        public T pop() {
            final T popped = top();
            elements.removeLast();
            return popped;
        }

        @Requires("!isEmpty()")
        @Ensures("result != null")
        public T top() {
            return elements.getLast();
        }

        public boolean isEmpty() {
            return elements.isEmpty();
        }

    }

The annotations describe method preconditions (2), postconditions (3) and class invariants (1). Cofoja uses a Java 6 annotation processor to create .contract class files for the contracts. As soon as Cofoja's Jar is on the classpath, the annotation processor is picked up by the service provider. No special work is necessary.

    javac -cp lib/cofoja.asm-1.3-20160207.jar -d classes src/*.java

To verify that the contracts are executed, here is some code which breaks the precondition of our stack:

    import org.junit.Test;

    import com.google.java.contract.PreconditionError;

    public class CofojaStackTest {

        @Test(expected = PreconditionError.class)
        public void emptyStackFailsPreconditionOnPop() {
            CofojaStack<String> stack = new CofojaStack<String>();
            stack.pop(); // (4)
        }

    }

We expect line (4) to throw Cofoja's PreconditionError instead of NoSuchElementException. Just running the code is not enough, because Cofoja uses a Java instrumentation agent to weave in the contracts at runtime.

    java -javaagent:lib/cofoja.asm-1.3-20160207.jar -cp classes ...

Cofoja is an interesting library and I wanted to use it to tighten my precondition checks. Unfortunately I had a lot of problems with the setup, and I had never used annotation processors before. I compiled all my research into a complete setup example.
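Because the contracts are only woven in when the agent is on the command line, a missing `-javaagent` option fails silently: the code runs, but nothing is checked. Here is a small sketch of a sanity check, assuming the agent Jar's file name contains `cofoja`. The `AgentCheck` class and its method names are hypothetical. It only inspects the JVM's startup arguments, so it cannot tell whether the weaving itself succeeded.

```java
import java.lang.management.ManagementFactory;
import java.util.List;

// Hypothetical helper: looks for a -javaagent option among the JVM arguments.
class AgentCheck {

    /** Returns true if one of the arguments is a -javaagent option mentioning the given name. */
    static boolean hasAgent(List<String> jvmArgs, String jarNameFragment) {
        for (String arg : jvmArgs) {
            if (arg.startsWith("-javaagent:") && arg.contains(jarNameFragment)) {
                return true;
            }
        }
        return false;
    }

    /** Checks the arguments the current JVM was started with. */
    static boolean cofojaAgentConfigured() {
        // Assumption: the Cofoja Jar has "cofoja" somewhere in its path.
        List<String> jvmArgs = ManagementFactory.getRuntimeMXBean().getInputArguments();
        return hasAgent(jvmArgs, "cofoja");
    }

    public static void main(String[] args) {
        System.out.println("Cofoja agent configured: " + cofojaAgentConfigured());
    }
}
```

Calling `AgentCheck.cofojaAgentConfigured()` from a test set-up method would make a misconfigured build or IDE run configuration visible right away, instead of surfacing as a NoSuchElementException where a PreconditionError was expected.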

Maven

Someone already created an example setup for Maven. Here are the necessary pom.xml snippets to compile and run CofojaStackTest from above.

    <dependencies>
      <dependency> <!-- (5) -->
        <groupId>org.huoc</groupId>
        <artifactId>cofoja</artifactId>
        <version>1.3.1</version>
      </dependency>
      ...
    </dependencies>

    <build>
      <plugins>
        <plugin> <!-- (6) -->
          <artifactId>maven-surefire-plugin</artifactId>
          <version>2.20</version>
          <configuration>
            <argLine>-ea -javaagent:${org.huoc:cofoja:jar}</argLine>
          </configuration>
        </plugin>
        <plugin> <!-- (7) -->
          <artifactId>maven-dependency-plugin</artifactId>
          <version>2.9</version>
          <executions>
            <execution>
              <id>define-dependencies-as-properties</id>
              <goals>
                <goal>properties</goal>
              </goals>
            </execution>
          </executions>
        </plugin>
      </plugins>
    </build>

Obviously we need to declare the dependency (5). All examples I found register the contracts' annotation processor with the maven-compiler-plugin, but that is not necessary if we are using the Maven defaults for source and output directories. To run the tests through the agent we need to enable the agent in the maven-surefire-plugin (6), like we did for plain execution with java. Both JVM options go into a single argLine element. The Jar location is ${org.huoc:cofoja:jar}. To enable its resolution we need to run maven-dependency-plugin's properties goal (7). Cofoja is built using Java 6 and this setup works for Maven 2 and Maven 3.

Gradle

The Gradle setup is similar to Maven's, but shorter. We need to declare the dependency on Cofoja (5) and pass the Java agent as a JVM argument during test execution (6). I did not find a standard way to resolve a dependency to its Jar file, and several solutions are possible. The cleanest and shortest seems to be from Timur on StackOverflow: define a dedicated configuration for Cofoja (7), which avoids duplicating the dependency in (5) and which we can use to access its files in (6).

configurations { // (7)

cofoja

}

dependencies { // (5)

cofoja group: 'org.huoc', name: 'cofoja', version: '1.3.1'

compile configurations.cofoja.dependencies

...

}

test { // (6)

jvmArgs '-ea', '-javaagent:' + configurations.cofoja.files[0]

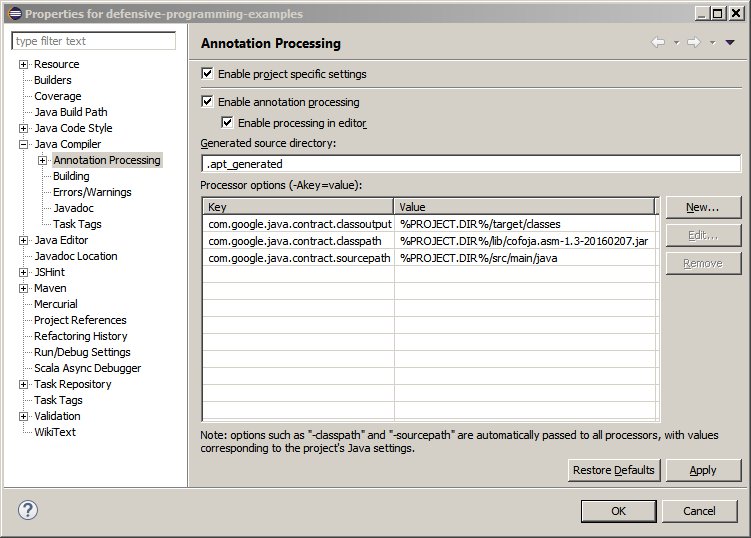

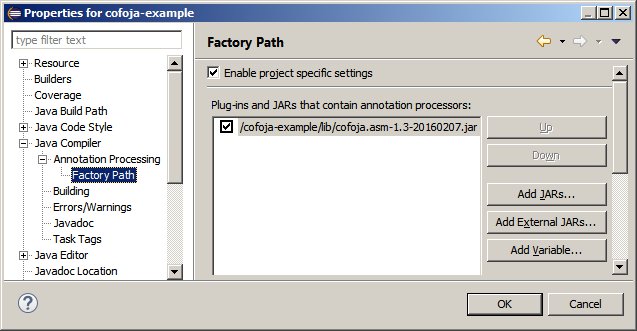

}EclipseEven when importing the Maven project into Eclipse, the annotation processor is not configured and we need to register it manually. Here is the Eclipse help how to do that. Fortunately there are several step by step guides how to set up Cofoja in Eclipse. In the project configuration, enable Annotation Processing under the Java Compiler settings.

Although Eclipse claims that source and classpath are passed to the processor, we need to configure source path, classpath and output directory.

    com.google.java.contract.classoutput=%PROJECT.DIR%/target/classes
    com.google.java.contract.classpath=%PROJECT.DIR%/lib/cofoja.asm-1.3-20160207.jar
    com.google.java.contract.sourcepath=%PROJECT.DIR%/src/main/java

(These values are stored in .settings/org.eclipse.jdt.apt.core.prefs.) For Maven projects we can use the %M2_REPO% variable instead of %PROJECT.DIR%.

    com.google.java.contract.classpath=%M2_REPO%/org/huoc/cofoja/1.3.1/cofoja-1.3.1.jar

Add the Cofoja Jar to the Factory Path as well.

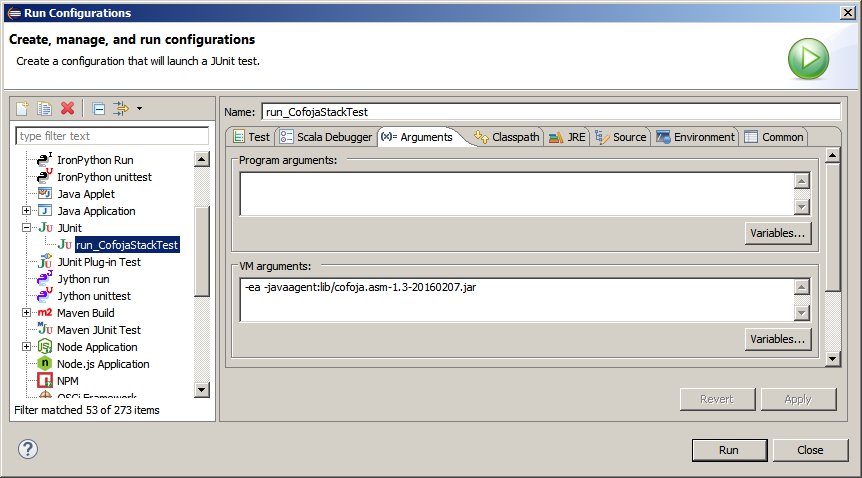

Now Eclipse is able to compile our stack. To run the test we need to enable the agent.

IntelliJ IDEA

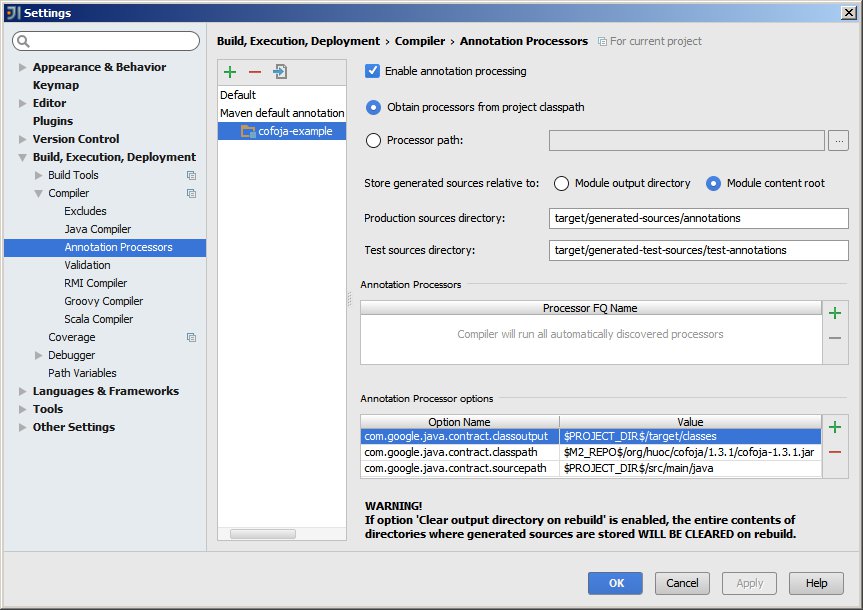

StackOverflow has an answer on how to configure Cofoja in IntelliJ. Enable annotation processing in Settings > Build > Compiler > Annotation Processors.

Again we need to pass the arguments to the annotation processor.

com.google.java.contract.classoutput=$PROJECT_DIR$/target/classes com.google.java.contract.classpath=$M2_REPO$/org/huoc/cofoja/1.3.1/cofoja-1.3.1.jar com.google.java.contract.sourcepath=$PROJECT_DIR$/src/main/java(These values are stored in

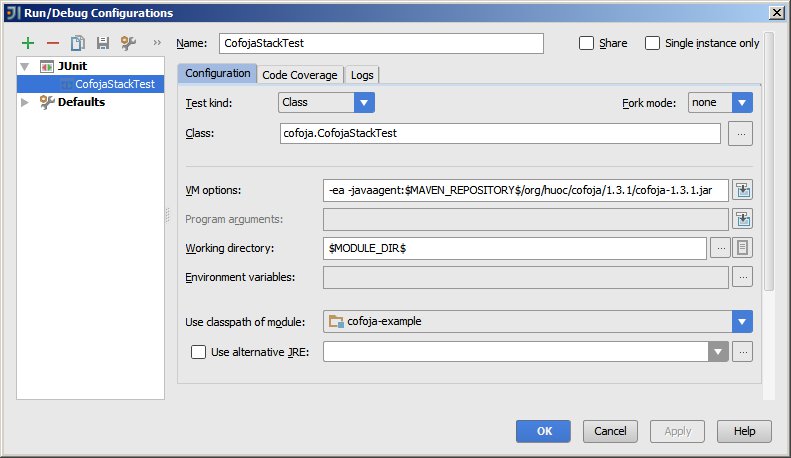

.idea/compiler.xml.) For test runs we enable the agent.

That's it. See the complete example's source (zip) including all configuration files for Maven, Gradle, Eclipse and IntelliJ IDEA.