This SoCraTes experience broke the ice with Scheme, i.e. it gave me enough exposure to a new language and enabled me to continue on my own. For the next two years I kept playing with Scheme, experimenting with code katas, e.g. the Bank-OCR Kata, porting well-known exercises like Parrot, Gilded Rose and Game Of Life, and I even solved some Project Euler problems using Scheme.

SICP

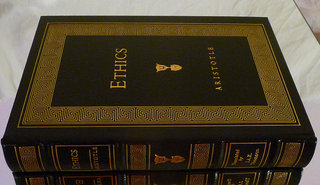

Earlier this year I started reading SICP. SICP stands for Structure and Interpretation of Computer Programs, a computer science text originally used in introductory courses taught at MIT in the eighties. It had been on my reading list since 2010, when Uncle Bob recommended it in one of his Clean Coder videos. SICP had been recommended again and again, and I was very happy to find the time and energy to read it.

SICP is one of the greatest books - if not the greatest book - I have ever read. It is fast paced and written in the style of all scientific books - too dense and hard to follow. Reading it is hard work. I used to like such books when I was studying applied mathematics many years ago - actually I was eating such books for breakfast ;-) The high density gives me new information fast. As I like to read books from cover to cover, I do not mind that the information is not accessible directly. Yes it is challenging to read. And it is very insightful. I appreciate most the skill of the authors to define abstractions. I am stunned again and again how they name their composed methods.

The Scheme Programming Language

SICP is strongly related to Scheme as it is one of the bibles of the Lisp/Scheme world. SICP uses Scheme for its code samples and exercises as Gerald Jay Sussman, one author of SICP, is also one of the creators of Scheme. Scheme is a version of Lisp and as such a divine language per se. It is a great language and unlike Lisp it follows a minimalist design philosophy. I really like its simplicity: There are no reserved words, not much syntax, just atoms, pairs and lists.

    (define atom?
      (lambda (x)
        (and (not (pair? x))
             (not (null? x)))))

Atoms are numbers, strings, booleans, characters and symbols. Symbols, i.e. names, usually represent other atoms or functions. Maybe you do not like all the extra parentheses, but the notation is compact and uniform. Because of the uniformity of the S-expression, it is easy to create parsers, embedded languages and even full featured Schemes, e.g. in Python, Haskell or any language. (To be fair, the last link contains implementations of Lisp, not Scheme, in 73 languages.)

History

As I said before, Scheme is based on Lisp. The Lisp programming language was created 60 years ago by John McCarthy. And Lisp is very special: it seems to transcend the utilitarian criteria used to judge other languages. In 1975 Scheme was created by Gerald Jay Sussman and Guy Lewis Steele. Watch this great talk by Guy Steele about issues associated with designing a programming language.

Standards

One thing which confused me a lot when I started playing with Scheme was its versions. The Scheme language is standardised by IEEE, and the de facto standard is called the Revisedⁿ Report on the Algorithmic Language Scheme (RnRS). The most widely implemented standard is R5RS from 1998. I mainly use that one because the first Scheme implementation I downloaded was R5RS compliant. R5RS files use the .scm filename extension. The newer R6RS standard, ratified in 2007, is controversial because it departs from the minimalist philosophy. It introduces modularity, which breaks everything. Being used to the strong compatibility of Java, or at least the major compatibility of Python, I did not expect versions to be incompatible at all. R6RS files use the .ss, .sls and .sps filename extensions. The current standard is R7RS from 2013, a smaller version, defining a subset of the large R6RS version and retaining the minimalism of earlier versions.

(Almost) Complete List of Special Forms and Functions

I did not read any tutorials or books on the Scheme language - I was exploring the language on my own. Still I wanted to know which library functions I could use. Similarly I used to list all classes available in each Java release. I searched for an exhaustive list of Scheme functions. There are many Schemes and I did not want to depend on any implementation specific extensions. In the end I decided to scrape the standards.

Scheme distinguishes between special forms and functions. A function call evaluates all arguments before control is passed to the function body. If some expressions should not be evaluated - as in if or switch (which is cond in Scheme) - a special form is needed. Special forms evaluate their arguments lazily, i.e. only the ones that are needed. For more information see Why is cond a special form in Scheme.

R5RS Forms and Functions
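The difference between a function and a special form can be made visible with a small experiment. This is only a minimal sketch, assuming any R5RS compliant Scheme. The names calls, traced, my-if, calls-with-if and calls-with-my-if are made up for this illustration and are not part of any standard. my-if tries to rebuild if as a plain function, and a counter records how many branch expressions actually get evaluated.

```scheme
;; Counts how many branch expressions have been evaluated.
(define calls 0)

;; Returns its argument unchanged, but records that it was evaluated.
(define (traced value)
  (set! calls (+ calls 1))
  value)

;; Hypothetical attempt to write `if` as an ordinary function.
(define (my-if condition then-value else-value)
  (if condition then-value else-value))

;; Special form: only the selected branch is evaluated.
(set! calls 0)
(if #t (traced 'yes) (traced 'no))
(define calls-with-if calls)        ; 1

;; Function call: both arguments are evaluated before my-if runs.
(set! calls 0)
(my-if #t (traced 'yes) (traced 'no))
(define calls-with-my-if calls)     ; 2
```

Both calls return yes, but the function version has already evaluated the branch it throws away. With a branch that has side effects or never terminates, that difference is exactly why if and cond cannot be ordinary functions.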

I scraped the list of special forms and built-in functions from the R5RS HTML documents. The list is incomplete since I had to rely on the formatting of the document. It misses define and the abbreviations like ' and @, but looks pretty good (to me) otherwise. Browsing the 193 forms and functions gives an idea of the built-in data types, i.e.

boolean char complex (number) exact (number) inexact (number) list number pair procedure rational (number) string symbol vector

as well as the possible conversions between them available in any R5RS compliant Scheme.

char->integer exact->inexact inexact->exact integer->char list->string list->vector number->string string->list string->number string->symbol symbol->string vector->list
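A few of these conversions in action, as a rough sketch for an R5RS compliant Scheme. The helper digits is a hypothetical name I made up for this example. It expects a non-negative exact integer and assumes that the digit characters have consecutive character codes, which holds in ASCII and Unicode based implementations but is not spelled out by the standard.

```scheme
;; Converting between numbers, strings, lists and vectors.
(number->string 42)              ; => "42"
(string->number "42")            ; => 42
(string->list "ab")              ; => (#\a #\b)
(list->string (list #\a #\b))    ; => "ab"
(vector->list (vector 1 2 3))    ; => (1 2 3)

;; Hypothetical helper: the decimal digits of a non-negative exact integer.
;; Chains number->string, string->list and char->integer.
(define (digits n)
  (map (lambda (c) (- (char->integer c) (char->integer #\0)))
       (string->list (number->string n))))

(digits 2019)                    ; => (2 0 1 9)
```

Chaining conversions like this is handy for digit puzzles of the Project Euler kind, and it uses nothing beyond the functions in the list above plus map.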

R6RS Forms and Functions

As mentioned earlier, R6RS is much larger than R5RS. It defines 630 forms and functions, most of them in the (new) libraries. The standard separates built-in forms and functions from the ones defined in libraries. (This is still very small, considering that the Java 8 core library contains 6000 public classes.) My list of forms and functions contains all of them, built-in and library alike. It looks complete, but I am not sure, as I have not worked with R6RS. From a quick glance R6RS adds bitwise and floating point operations as well as different types of byte vectors, enum-set and hashtable. When looking at my list now, I see that I should have scraped the names of the library modules as well. (I added that to my task list for 2019 ;-)

Scheme Requests for Implementation

Next to the RnRS standards, there are the SRFIs, a collection of concrete proposals and reference implementations. People implementing Scheme choose whether to implement SRFIs or not. Most Schemes support some of them, see Arthur A. Gleckler's report of SRFI support by Scheme implementations in 2018. Some Schemes have package managers which allow you to download and install packages. Usually some of those are SRFI implementations. I guess I also need to scrape these.

Conclusion

Scheme (Lisp) is so much fun, especially when you do not have to deliver anything. Most people I meet got in touch with Lisp during university but never followed up. Some are even afraid of it. Since 2016 I run Scheme coding sessions at every unconference or SoCraTes event I attend. I invite people to mob with me and I do all the typing. We always have a good time, and - after some warm up with Scheme - people really like it. They all enjoy the opportunity to dive into Lisp again. Will you?

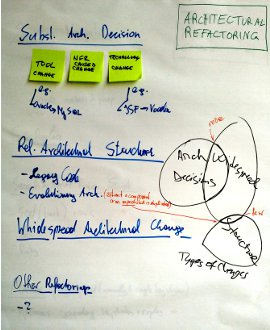

1.1. Tool Change


All of them do not change the overall structure, they just work on the implementation. Often
