We teamed up with the local Java Student User Group and started inviting speakers. To our surprise we had absolutely no problem finding speakers and even sponsors. 200 emails later we ended up with 10 presentations and 8 sponsors (besides the Eclipse Foundation, which sponsors all demo camps).

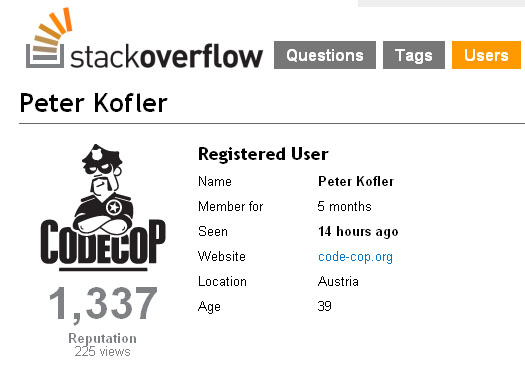

When Jeff and Chris of EclipseSource offered a presentation, we were delighted but had to drop our own talks about Free Quality and Code Metric Plugins and Xtext NG to provide some space for these well-known Eclipse veterans. (So, as usual, there was no time for code quality topics ;-) Interest in attending as well as in giving a talk was quite high. I'm sure we could have added a second track, but we did not want to because of Bjorn Freeman-Benson's recommendation for Eclipse X Days.

JSUG had organised the room and infrastructure for us. The enthusiasts of the core team helped the speakers prepare their stuff while I was giving them our Eclipse DemoCamp shirts. When the presentations started there were more than 80 people in the room. I had the pleasure of welcoming them and presenting the agenda. Although I only had ten slides I was very nervous. It was the first time I had spoken in front of that many people.

Werner Keil started with an introduction to the Spatio-Temporal Epidemiological Modeler (STEM). He talked about its general application and importance. At the end he played an interesting video showing simulated infection rates throughout the world.

Next, the Lixto development team presented some features of their RCP-based screen scraper and how they used JFace components to render the different GUIs of the Lixto Visual Developer application.

The Eclipse plugin ReviewClipse was presented by Christoph Mayerhofer. It's a useful tool that makes code reviews easier and thus more likely to be done. Obviously a code cop has to love it. Currently I'm reading all diffs from junior developers every morning, so I'll give it a try.

Chris Aniszczyk and Jeff McAffer are both seasoned speakers and good entertainers as well. In a whirlwind tour of the Toast demo application they showed what you can do with EclipseRT technologies. Believe me, it's cool stuff.

The last presentation of the first block was about e4, the future platform of Eclipse, given by well-known Eclipse committer Tom Schindl. Tom is probably one of the most motivated Eclipse enthusiasts. You can feel the fire burning in him when he's talking about his work, which is very stimulating. And he hates singletons as much as I do. Good boy.

The planned half-hour break was way too short to eat 400 sandwiches along with water, Red Bull and smoothies. Michael even went to fetch some beer because Chris had written that he was looking forward to Austria and the Austrian Stiegl Bier. People were enjoying the sandwiches, standing together in small groups and chatting away. Unfortunately I didn't have time to talk to everybody I knew.

Our "modelling track" was opened by Robert Handschmann's demo of the Serapis language workbench. It looked mature and makes model-driven development quite easy. What I liked most was that Robert was able to answer all questions regarding additional features by simply showing the feature in the workbench.

After that Maximilian Weißböck explained the basics of Model Driven Software Development and showed how easy it is to add stuff when you have a working modelling solution. He finished with the advice to always use a modelling approach because the tools (read Xtext) are mature enough to pay off even for small projects.

Next Karl Hönninger gave a short demo of openXMA, a RIA technology based on EMF and SWT. Now XMA is not your 'new and sexy' technology, but it's 'improving'. The new openXMA DSL is based on Xtext and combines domain and presentation layer modelling. Karl used a series of one-minute screencasts to demo the XMA Eclipse tool chain. That's a good way to make sure that the demos work while staying flexible enough to skip parts of them.

Then Florian Pirchner showed how they had created dynamic RCP Views enriched with Riena Ridgets that interpret their EMF model and update in real time: Riena-EMF-Dynamic-Views. Redview seemed like magic to me (either that or I was already getting tired ;-).

Unfortunately I was not able to attend the last presentation, given by Philip Langer, about model refactoring by example. Michael and I were busy preparing to move on to the chosen "beer place". In the end about 30 people made it there. In the nice atmosphere of our own room we had some beer and lively discussions. For example, I have some (blurred) memories of Michael showing slides of some Xtext presentation on his notebook. The evening ended when the waiter threw us out half an hour past closing time (midnight).

Organising a demo camp is work. But it's also fun. And obviously it paid off. It was great. Thank you, everybody, for making it such a nice evening. Maybe we'll see each other again next year.

It has been more than half a year now since I left Herold for good. In the end work there got quite boring and some things really sucked. But after staying there for five years the development team had become my family. My bond with my colleagues kept me from leaving earlier and made it a really hard decision when the time for something new had finally come. I still remember them, and from time to time I suffer moments of painful memories. I do not know how it came to be like that. There were no activities together, no common hobbies to talk about, not even geek interests to compete in. We did not meet after work or go out for a beer together, at least not more than once or twice a year.
