During COVID lockdown, I worked remotely. I did all my Code Cop things, e.g. pair programming, code reviews, coding workshops, just in a remote way. All these tasks involved other people and I was severely affected by my "remoteness". After one year of remote work, one of my clients ran an in-house knowledge sharing on how to do that successfully. They invited me and I thought about the advice I would give. What would be my top three tips for remote work?

During COVID lockdown, I worked remotely. I did all my Code Cop things, e.g. pair programming, code reviews, coding workshops, just in a remote way. All these tasks involved other people and I was severely affected by my "remoteness". After one year of remote work, one of my clients ran an in-house knowledge sharing on how to do that successfully. They invited me and I thought about the advice I would give. What would be my top three tips for remote work?1. Check-in

When working remotely, the first and foremost thing I use are check-ins. During a check-in everybody says a few words how he or she is here - as a human being. The check-in is not about your role, your expectations or current work. It is about how you feel. When we work separately, I miss your face and body language and we need to be explicit how we are. In a face to face situation I might be able to recognise if you are tired or distracted or upset. I am unable to identify the little hints when you are just a small image on my screen. Now I place more emphasis on the human and emotional side of my peers because it is missing in a remote setting. Some people always reply with "I am OK" and I am fine with that. Nobody has to share. Some people keep talking and I have to remind them what the check-in is about and stop them from taking all the space. Conversation, arguing or discussion break the check-in. And at the end of the meeting I ask everybody to check-out, which is the opposite, asking how they are leaving as human beings.

2. Be Professional

I preach being professional. After all "I help development teams with Professionalism" (as written on the second introduction slide of all of my decks). Professionalism is an attitude, it is how you do your job. This includes also your appearance. In a remote setting, your appearance is the image of your face, the sound of your voice and the background behind your head. There are several implications: Your camera is always on. You are in the centre of your camera's focus and the camera is ideally placed on top of your screen where you are looking at from time to time. On the other hand, when only half of your face is visible, or your face is distorted in some way, it is hard to see and to connect. In communication theory, as the initiator if the exchange, the sender is responsible for the message to be received. Much of your message is conveyed by your voice. For the best audio, use a headset with a microphone so your team mates have a change of hearing you. Next I recommend a professional background. Best is a uniform wall or board. In the beginning of remote work, I hung a blanket between two window handles to provide a uniform, non distracting background during my video calls. Non uniform backgrounds are distracting and make it harder to focus on the face of the person speaking. A Green Screen background helps if you have no option to work in front of a wall or door. Please choose a neutral image with soft colours. Most Green Screen backgrounds are unsuitable.

3. Strive for the same Level of Collaboration

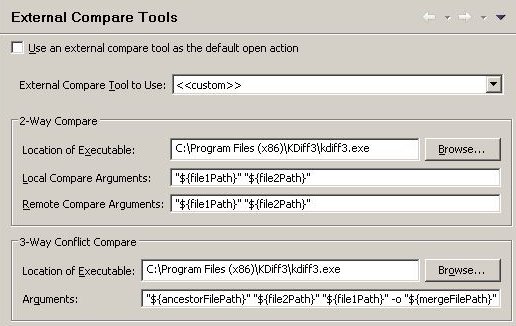

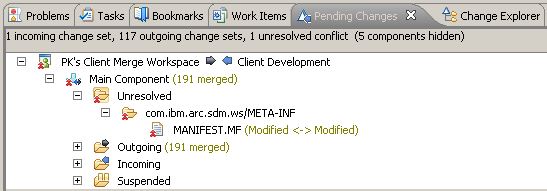

3. Strive for the same Level of CollaborationIt is harder to collaborate under remote working conditions. For example pair programming is more complicated. Now I do not accept less collaboration than before remote work. There are options and tools to overcome the barrier. Ten years ago, in 2012, I already used tools as TeamViewer or AnyDesk to control the machine of my remote pairing partner. I spent many hours working like that and it worked well. Modern tools I have used include Code With Me, which works with the JetBrains family of IDEs, Visual Studio Live Share, which you can even join with a browser, Floobits, CodeTogether and mob.sh. If you are blocked by remote work, push for some solution to keep in touch and do what you used to do. Do not accept less collaboration than in face to face situations.

We need to keep in touch

After I had put together my three tips, I was surprised. I had expected some technology or tool but all three tips are about connecting. Then it is not a surprise after all, because remote work first and foremost affects collaboration. Most advice of the other presenters was aligned with that. Here are some examples: When working closely with a team you need to keep in touch. You should speak with your team members often, probably talk daily. You should also meet outside work, e.g. remote game events like online escape room. Meeting in person is even better, e.g. visiting each other or visiting the office once in a while.

Video Conference All Day

A team lead shared his story how they had managed transition to remote work. The team used to talk a lot during the day. When remote work started, they kept a video call running. Whenever people would work, they would be in the call, unless they were in a meeting with someone. Many team members used a second device, e.g. a tablet, for this ongoing call. They felt like being in the office and whenever someone wanted to talk, she could talk to the people in the room. Using the video conference open all day, the team kept up the good vibe while fully working remotely.

I am curious about your tips to improve remote collaboration. If you have any, I invite you to comment.

All of them do not change the overall structure, they just work on the implementation. Often

All of them do not change the overall structure, they just work on the implementation. Often